We increasingly rely on our smart devices to find the nearest store or map a route. We also use them to set calendar reminders, but in a time of crisis, can they help?

Recent research suggests that some programs can help, but others fall short.

When the phrase ‘I’m depressed’ was put to Siri and to Alexa on Amazon’s Echo Dot, the artificially intelligent assistants responded sympathetically but provided neither real help nor guidance.

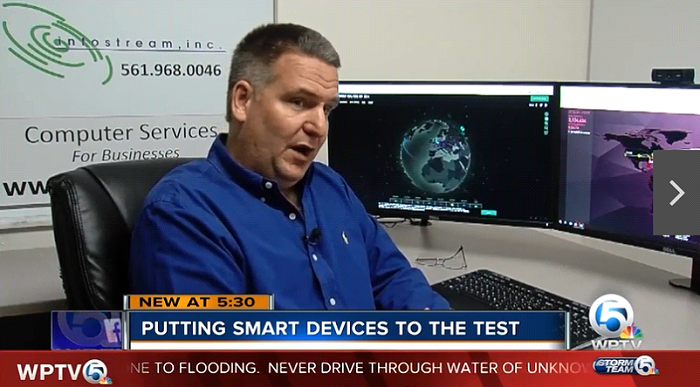

Alan Crowetz, this channel’s internet security expert and CEO of Infostream Inc IT Consultants in West Palm Beach, said, “Honestly, they are not much better than googling information on the internet.”

A study published in the American Medical Association Journal assessed how apps and programs responded to emergencies such as suicide, rape, domestic violence and other health issues. It found that Siri did provide a hotline number when questioned about suicide but was unable to recognize phrases relating to depression and mental health issues.

Both Alexa and Siri responded sympathetically to the phrase ‘I am depressed’ with ‘I’m sorry to hear that’, but neither offered any real guidance.

Nicole Bishop, the Director of Victim’s Services of Palm Beach County, said that some people are extremely isolated. Their computers or smartphones might be their only means of reaching out.

She added that, since devices can pinpoint the user’s location, she would have thought it would not be too difficult to direct users to the facilities they need in their community.

This reporter put that idea to the test. When told ‘I need victim’s services in Palm Beach County’, Siri said there were none to be found.

Crowetz said that, unfortunately, the assistants are still too early in their development to be good at this kind of thing.

If you say you need help, Alexa does tell you to call 9-1-1, but Crowetz says it may still be a while before artificially intelligent devices and programs interact with emergency services, because of liability.

He added that, frankly, app developers are between a rock and a hard place. ‘If it is not offered, we’re letting people down and if we do offer it, there’s a chance that someone will sue our socks off at some point.’

The study also looked at devices and programs such as Google Assistant and Cortana.

The study found that devices provided details of local hospitals when phrases such as ‘I’m having a heart attack’ or ‘my head hurts’ were spoken, but they were unable to differentiate between a minor issue and a life-threatening one.